The Ultimate Guide to E-Commerce URLs

Hello and welcome to Oxford Comma Digital’s ultimate guide to e-commerce URLs! This is a HUGE topic so settle in, get a cup of tea and feel free to look at the contents to skip to what you need to focus on. At the end of it, you’ll be an e-commerce URL expert! First things first, let’s go back to basics to make sure we’re all on a similar grounding.

What is a URL?

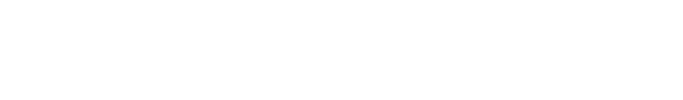

A URL is essentially an address for something on the web. It’s used by browsers to find a resource and it stands for Uniform Resource Locator. Let’s take this as an example:

In the image above, you have a blog page URL, the format of which looks like many other blog post URLs. You have your protocol, HTTP or HTTPS, which stands for Hypertext transport protocol. You then have our domain name for Oxford Comma Digital, oxford-comma.digital. Then the path, /thoughts/e-commerce-page-titles.

So that’s the main URL, however, you can have things appended to these, such as “?” and “#”, which we’ll explore in more depth later.

So now that we know what a URL is and what it’s made up of, let’s delve into some good rules to have for URLs that are applicable to all sites.

URL Best Practices

The following are best practices for all sites, whether it’s e-commerce or healthcare or anything in between. After this, we’ll delve into e-commerce-specific issues and recommendations.

URL Casing

It’s best to stick to lowercase URLs. There will be some cases where you can’t change this, sometimes file names or images on certain platforms can’t be edited and they have a capital letter in there. On a small scale or where there’s no lowercase alternative this is fine. The main problem we’re trying to avoid here is duplication.

Duplication is going to come up a lot in the following sections. It’s not always bad, but almost always, you want to avoid it and you definitely want to avoid it in the instance of having both lower and uppercase letters in your URL.

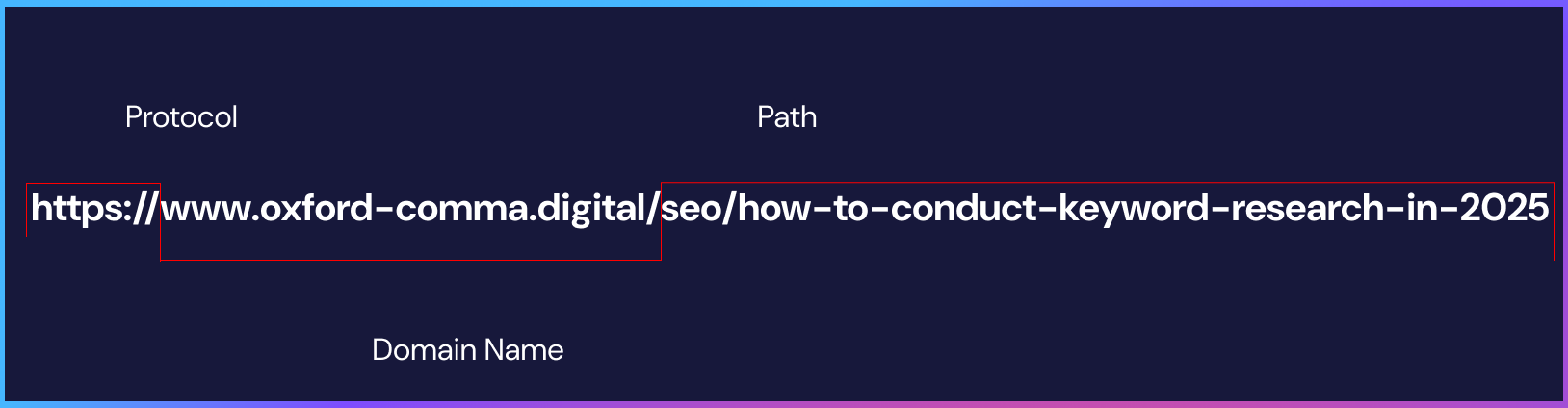

For example, if I had these URLs:

These are technically two different URLs as search engines such as Google are case sensitive but reading them, you’d expect that content to be exactly the same and what you’ll find is that some platforms will serve the same content for both of those URLs, which causes duplication.

So what you’ll want to do in these instances is add a redirect for anything with an uppercase to a lowercase (not taking into account the exceptions mentioned above) or be extremely careful about the URLs you end up creating and ensure that they only ever include lowercase characters.

Non-diacritic characters and symbols

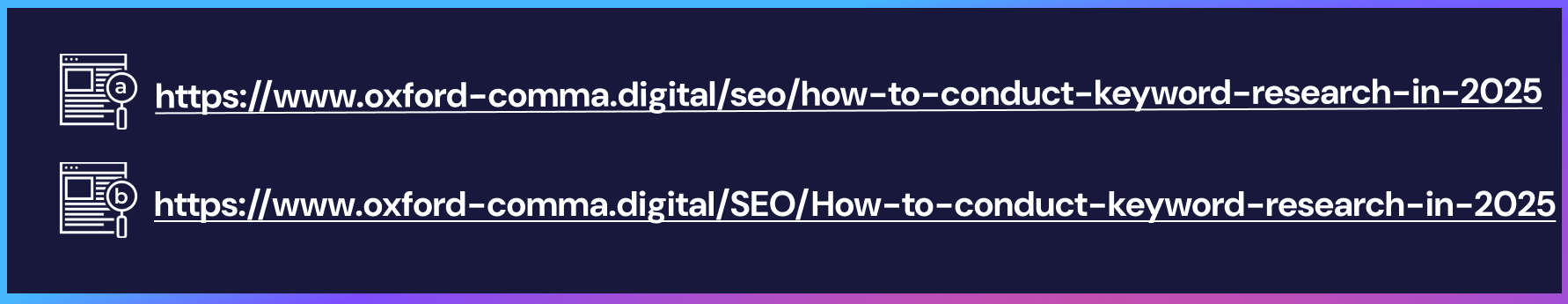

For example, a space in a URL will result in that URL looking slightly different than what you’d expect:

And so, what you end up with is two versions of the URL. Now, Google says that they see the two URLs as being the same, however, when it comes to data analysis and symbols used in bulk, this can become a huge pain.

Diacritic characters are accents on letters. Again, it’s totally fine to have an accent in a URL if you’re creating content for a language with accents, Google will equate the two versions of that URL to be the same thing but again, the data analysis can prove to be irritating.

HTTP vs HTTPS

At this point, your site shouldn’t be going across just HTTP. The additional S is for safety and it’s really important. But the message here is that again, it’s leading to duplication because these are technically two different URLs and are treated as such by search engines. So if you have a lot of inlinks pointing to HTTP versions of your URLs, you’re pointing to a duplicate. And if you redirect them all (please do) but still inlinking to them, you’re still making people wait a bit longer to access your content and making search engines discover and crawl more URLs than they should have to. If you’re an e-commerce store with a lot of products and therefore URLs, then wasting crawl budget isn’t a good option.

Trailing slash

Your site will work with either a trailing slash or no trailing slash, for example:

Most platforms will automatically redirect one version to the other, but you’ll need to make sure that’s the case. Best to choose one and stick to it. And, as with the HTTP vs HTTPS, you’ll need to be careful with inlinking. Make sure you choose one version and stick to it.

www vs non-www

Yet another example of potential duplication! Again, these are treated as two different URLs and you’ll need to make sure you choose one and stick to it, redirecting one version to another and linking only to your chosen version.

E-commerce URL Best Practices

Now we’re getting to the part of the blog where we’re delving into issues or opportunities found on e-commerce sites. But before we start, let’s take a small side-quest and look at typical page types you’ll find on an e-commerce store. Feel free to skip straight to the best practices if you already know this.

Typical E-commerce page types

Homepage: This should just be your domain name with either a trailing slash or not. Some platforms will create a URL that has /home appended at the end, and if you can redirect that to your main domain name page or stop it being created in the first place.

Product listing page: These are also known as lister pages, category pages etc., it’s where you list out your products so people can browse through them.

Product detail page: This is the page people land on if they’ve browsed your PLPs and found a product they like or landed on the page through some other method of discover like Google or social media. The page is dedicated to one specific product and its options or variants.

Product variant page: You have the choice of having specific, targeted pages for your product variants or you can have one main product page with a parameter URL for people to see variants of your product.

Pagination URLs: These are typically found from your product listing pages if you need to click to see the next page. They’ll likely be parameterised, for example: ?page=2. They’ll show the next set of products within the category. An alternative to this is infinite scroll, where the customer just keeps scrolling to discover more products. John Lewis are using a mix of both. For the user, it’s infinite scroll, you just need to scroll to see the next block of products, but a new URL is triggered so there’s a bit more control over how bots crawl the site.

Sort by URLs: These are, again, usually found from PLPs, it’s where you can choose to sort by recommended, most expensive, least expensive etc.

Attribute URLs: These are also found from PLPs. If you’re on a jeans page and wanted to see only black jeans, you can filter by those in the attribute filters, which are normally situated in a left hand navigation or up the top of the PLP, and you’ll likely be taken to a page that shows (hopefully) only black jeans. This page will typically be parameterised, though that’s not always the case.

Best Practices

OK back to the main quest. When it comes to best practice, there’s the actual best practice, and what you can ultimately do with the platform you’re using. I’m going to detail both the ideal situation as well as what’s most commonly what you’ll actually be able to do with most platforms. If you’re using a platform that allows for more customisation then the ideal might be available to you and in that case, go for it.

Controlling Filter, Sort and View By Page Indexing

#Fragments

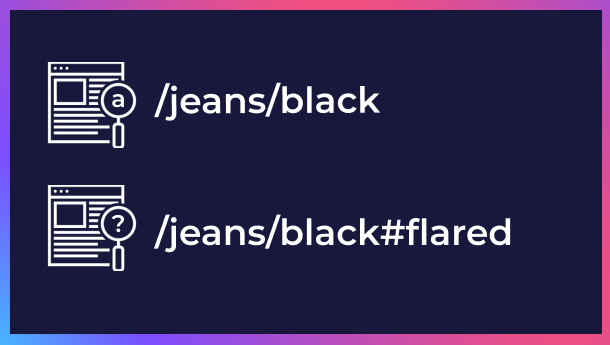

Fragments, or hashes, are absolutely wonderful and unbelievably useful. You’ll typically see them in the wild when they’re employed as anchor links and they look like this /jeans/black#flared. To see an example of them in the wild, take a look at the contents list up the top, they’re anchor links that will take you to various sections within the text.

The problem is, for the following use case, they’re not typically available to use on most platforms and you’ll end up using parameters instead, which are still useful but not as great as hash URLs.

Fragments are so useful because if you really don’t want Google to be crawling your URLs, it won’t, as Google doesn’t “see” beyond the hash. You’re probably wondering why on earth you wouldn’t want Google to see your URLs but it’s worth remembering that with e-commerce sort URLs, price filtering, attribute filtering and so on and so forth, you can inadvertently end up with millions of URLs, that you don’t want crawled or indexed. For example, I worked on an e-commerce store that had about 50k pages, that they wanted indexed. Because of lack of control to attribute filter pages, Google was crawling over a million. It can balloon VERY quickly.

Now, the decision on whether you want to index this or not is a separate decision but not one we’re making in this post, for the sake of the example, we don’t want to be indexing this URL and we don’t want Google to crawl it either. In this case, the URL jeans/black#flared is ideal because Google would see this URL as being the same one you navigated from /jeans/black/ and so it won’t impact your crawl budget having that URL on site but users will still be able to access that URL in the normal way.

To use a more extreme example, it means that users can navigate to /jeans/black/#flared&skinny&white&workwear and filter to their heart’s content without Google discovering, crawling, rendering and indexing these URLs. All of those attributes added to the end of the URL are why your page count can balloon so easily.

It’s important to use these hash URLs correctly though, if you have two separate products with a format like this /products#black-jeans, Google isn’t going to see that product URL and none of your products will be indexed. So use with caution.

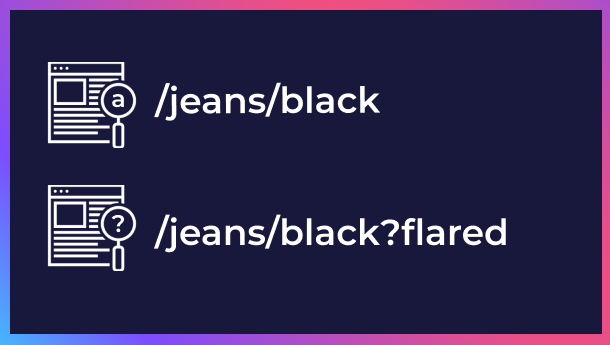

? parameterised URLs

Parameterised URLs are the next best option and the option that’s most likely to be available to you when it comes to linking to filtered or sorted PLPs. The reason why it’s not as good for this task is that Google sees these as a different URL to the main URL, for example:

/jeans/black?flared can be seen as a different URL to /jeans/black. There are absolutely reasons why a parameter is an excellent choice but for this use case, (controlling indexing and crawl depth) it’s not necessarily the best. In this instance, you’re going to have to be extremely clear with which parameters you want Google to access and index. If you don’t want the URLs to be crawled, you can define that in your robots.txt file but if you’re using parameters for any other reason, then it’s best to have certain rules around their use. For example, if you’re using parameterised URLs for pagination and you want Google to be able to see your paginated pages, then blocking just URLs with “?” in them will also block your pagination. Instead, you’ll need to have some rules, for example, for filtering you always include the syntax ?filter and for pagination you always use ?page. That way you can block one in robots.txt and not the other.

It’s also a good idea to nofollow the URLs you don’t want Google to be crawling and indexing as Google will sometimes go rogue and crawl them anyway. In fact, even with both nofollows and the robots.txt file in place, Google may still crawl these URLs, which is the main reason why a hash is better.

Linking to Product Variants

There’s a decision to be made when it comes to product variants and that’s whether you want them indexed or not. In fact, you might want to employ a flexible strategy here and have some indexed and some not indexed and that will likely depend on the demand for your product variants. For example, if I were running the Charlotte Tilbury site, I’d absolutely have a separate indexable page for each colour of each lipstick type, as the demand for their products is that granular so it’s worth it.

So if you want to index a variant the best thing to do is to have a separate URL if you can. If you can’t have a separate URL, a parameter will be best and will allow bots to find those URLs.

If you don’t want to index them, use a # if you can and a parameter if not and block the parameter type in the robots.txt file and nofollow the inlinks.

Pagination URLs

Ok, so the best practice for pagination URLs is to have URLs that look like ?page=2 or another kind of parameterised page, have self-referencing canonicals and allow Google to crawl them as that way they can discover all of your products. However, if you have a truly extensive set of categories, where you know they’re being crawled and you know that all of your products are featured on at least one of the first category pages, you might not need Google to crawl all of your pagination pages, which, realistically, it’s going to give up on after a certain point. If this is the case, you could probably hash them, though it’s uncertain whether Google will rank your category pages differently if it thinks you have 30 products vs 300 products.

That was our ultimate guide to e-commerce URLs! This is a really extensive topic that touches on many other topics, so reach out if you’d like additional detail.

Glossary

Attribute: A quality of a product. For example, a pair of trousers might be blue. Blue is an attribute of the trousers. On an e-commerce store, blue would be an attribute value under the type of colour.

Diacritic characters: This is a mark above, below or at the side of a letter, which will impact the pronunciation of the word.

Fragment URLs: These are URLs with a #. However, it’s not a hashbang URL, which looks like this #!. This will not behave in the same way as a hashed URL.

Indexing: Indexing in the context of SEO almost always means the process of getting your website or individual page URLs into Google’s database so it can be served in search engine results pages.

Inlinking: A link on your site to another URL on your site.

Platform: This can be your content management system like Wordpress or it can be an e-commerce platform like Magento. It’s the system your website is built upon.

Redirect: A redirect is where you request a URL and the server gives you another URL instead based on a rule or specific example given by someone running that website. For example, if you request a URL that’s no longer used by the organisation, they’ll want to give you a URL that provides either the same or similar information.

Robots.txt: Is a file on your website that suggests to bots on how to behave on the site, for example, not crawling a certain section or specific page.

Trailing Slash: This is a “/” right at the end of the URL.